Key Takeaways

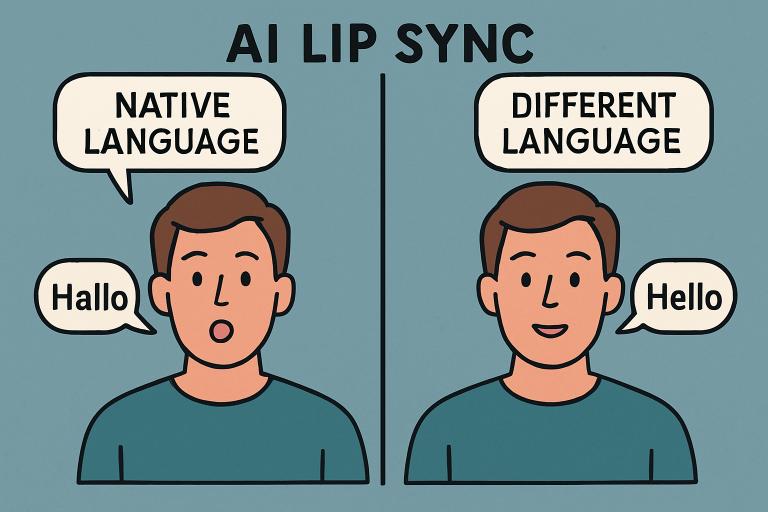

- AI-driven lip-sync technology is revolutionizing video content by enabling seamless multilingual translation and personalized messaging.

- Major platforms like Meta and YouTube are integrating AI lip sync features to enhance user engagement and accessibility.

- Innovations in AI lip sync are significantly reducing production time and costs for content creators.

Introduction

In the digital era, video creation is evolving at an unprecedented pace, driven by breakthroughs in artificial intelligence. Among the most exciting advancements is AI-powered lip sync, which is rapidly transforming content localization and personalization by automatically synchronizing a speaker's lip movements with dubbed audio. Modern lip sync AI tools now allow creators to deliver immersive, native-quality experiences to global audiences without the high costs or delays of manual editing.

AI lip sync tools analyze the voice track, facial expressions, and even emotional tone to generate lip movements that match the translated audio. Creators and platforms can therefore translate videos while keeping the speaker's appearance natural, which lets content cross language barriers and reach new markets.

This shift is about more than convenience; it is redefining accessibility and user engagement. As more major platforms integrate AI lip sync, content creators can reach multilingual audiences more efficiently than ever before. For viewers, the result is localization without the distraction of mismatched audio and lip movements, so each video feels native. The technology is setting a new standard for quality and reach in digital media, and both established tech giants and innovative startups are racing to improve its accuracy, realism, and ease of use.

The Rise of AI Lip Sync Technology

The leap in AI lip sync is rooted in advanced machine learning: models trained on large datasets of audiovisual content learn how speech sounds correspond to mouth movements. Unlike manual dubbing, which requires frame-by-frame adjustments, modern AI systems can adapt to a wide range of speakers and languages while preserving facial nuances and speaking style. Zero-shot models such as Lipsync-2 from Sync Labs require no speaker-specific training, showing that minimal input data is enough for accurate, expressive lip synchronization. This has made high-quality dubbing accessible to far more creators and has greatly sped up the workflow for media producers.

Recognizing the potential to bridge language divides, major platforms have moved quickly to deploy AI-driven lip-sync features. Meta was among the first to roll out an AI translation and lip-sync tool on Facebook and Instagram, allowing creators to dub their content with lip-synced translations, initially between English and Spanish. By aligning mouth movements with translated dialogue, these platforms help make content more inclusive and engaging for users around the globe.

YouTube, another digital titan, is piloting its own AI lip sync system to help creators localize content more convincingly. The tool automatically adjusts mouth movements to match dubbed audio, so dubbed uploads look much closer to their original-language versions. Creators gain high production value without language-specific reshoots. TechCrunch recently reported on the competitive momentum in this space as platforms vie to offer the most authentic viewing experience.

Benefits for Content Creators

- Faster Production: Eliminating manual editing dramatically reduces project turnaround time, enabling creators to respond quickly to trends and breaking news.

- Lower Costs: Automated workflows require less staffing and studio time, making professional-quality dubbing feasible for creators and brands of all sizes.

- Broader Global Reach: By producing credible multilingual versions, creators can build audiences in countries where language would otherwise be a barrier to engagement.

These capabilities greatly expand the potential for viral reach and cultural resonance, helping brands and influencers enter new markets more easily.

Technological Innovations Driving the Field

At the heart of recent advances are diffusion transformers and other state-of-the-art deep learning techniques, as seen in OmniSync's universal lip synchronization model. These models process high-dimensional audiovisual data and generate realistic lip movements across varied scenes and speaker demographics. The result is video that retains emotional expressiveness, subtle facial cues, and precise synchronization across a wide range of languages and speaker appearances.

Such breakthroughs are opening the door not only to real-time dubbing but also to applications in live streams, game characters, virtual meetings, and more. The speed and quality of AI-driven lip sync are expected to keep improving as models train on richer datasets and compute power increases.

Applications Across Industries

- Education: E-learning platforms use AI lip sync to make instructional videos accessible in numerous languages, improving comprehension and inclusivity for students worldwide.

- Entertainment: From streaming series to blockbuster films, studios can deliver convincing dubbed content, reducing reliance on subtitles while preserving the actors' original on-screen performances.

- Marketing: Brands create localized ads that speak directly and authentically to different linguistic and cultural audiences, personalizing campaigns for maximum impact.

AI lip sync tools are also being leveraged in healthcare for multilingual patient education videos and in enterprise training to deliver consistent messaging efficiently worldwide.

Challenges and Ethical Considerations

With new technology come new concerns. The same techniques that enable powerful video dubbing can be co-opted to create deepfakes and misinformation. This raises urgent questions about privacy, consent, and the authenticity of content. Issues of cultural accuracy further complicate adoption, since translations must reflect not only words but also social and emotional context to avoid miscommunication or offense.

The industry is responding with increasingly robust detection tools and ethical frameworks, while experts advocate for transparency in labeling AI-generated translations and lip syncs to maintain public trust.

Future Outlook

The evolution of AI lip sync technology is far from over. Continued research is driving up quality, fluency, and accessibility while also expanding language coverage and minimizing lag in real-time applications. As standards emerge and best practices develop, seamless and ethical multilingual video production could become the default for digital creators in entertainment, education, and communications worldwide. The next frontier is integrating AI lip-sync with other generative AI media tools, promising truly universal, high-fidelity video personalization across every screen and device.

Conclusion

AI-powered lip sync technologies are at the forefront of a wave sweeping through video content creation and localization. By combining accuracy, speed, and multilingual support, they are opening unprecedented opportunities for creators, brands, and educators. The challenge now is to embrace these advancements while navigating the ethical landscape, ensuring this technology enhances communication, trust, and inclusivity for audiences everywhere.